Automated Data Lineage Key Concepts

Automated data lineage uses metadata to trace and visualize data flow across systems—from origin to final destination. It tracks data transformations, dependencies, and system interactions, uncovering how data moves and evolves.

Data lineage bridges the gap between business and technical metadata, linking business terms and definitions with their underlying data elements. Users can search for a business term, understand its meaning, and trace it across various systems, reports, and analytics. Such insights are invaluable for data governance, quality management, root cause analysis, and collaboration between business and technical teams.

This article explores critical components captured by data lineage, the distinction between data and pipeline lineage, and their use cases. We cover the benefits and challenges of automated lineage management and key considerations for implementing an effective automated data lineage platform.


Core components captured by data lineage

When implementing a data lineage solution, the following key components provide a comprehensive view of the data lifecycle in the platform.

Data origin and destination

Understanding where data is created and where it ends up is fundamental to lineage. Data origin could be a raw file, a database, or an external API, while the destination might be a report, dashboard, or another database. Lineage tracks every touchpoint from source to destination. Lineage should also be able to capture any changes to the source and sink by integrating with the underlying system or application.
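One way to picture this is to model lineage as directed edges between datasets. In the minimal sketch below, which assumes hypothetical dataset names, origins are nodes with no upstream inputs and final destinations are nodes that nothing else consumes.

```python
# Hypothetical lineage edges: (upstream dataset, downstream dataset)
EDGES = [
    ("api://orders", "raw.orders"),
    ("s3://landing/customers.csv", "raw.customers"),
    ("raw.orders", "analytics.daily_sales"),
    ("raw.customers", "analytics.daily_sales"),
    ("analytics.daily_sales", "dashboard://sales_overview"),
]


def origins_and_destinations(edges):
    """Return (origins, destinations): nodes with no inputs and nodes with no outputs."""
    nodes, has_input, has_output = set(), set(), set()
    for upstream, downstream in edges:
        nodes.update((upstream, downstream))
        has_output.add(upstream)
        has_input.add(downstream)
    origins = sorted(nodes - has_input)
    destinations = sorted(nodes - has_output)
    return origins, destinations


origins, destinations = origins_and_destinations(EDGES)
print("Origins:", origins)
print("Destinations:", destinations)
```

Every other node in the graph is an intermediate touchpoint on the path from origin to destination.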

Data transformation

Data rarely stays in its raw form throughout its lifecycle. Automated lineage traces all data transformations, whether aggregation, filtering, or restructuring. Tracing these transformations explains how data changes and why it appears in its current form. Transformation tracking is closely tied to lineage granularity, which determines how much transformation-level detail is captured.
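As a minimal sketch of automatic transformation capture, assuming transformations are plain Python functions, a decorator can record which datasets each step reads and writes without changing the business logic. The `track_transformation` decorator, the in-memory `TRANSFORMATION_LOG`, and the dataset names are hypothetical.

```python
import functools

# In-memory log of transformation steps; a real system would persist these events.
TRANSFORMATION_LOG = []


def track_transformation(kind):
    """Decorator (hypothetical) that records which datasets a transformation reads and writes."""
    def decorator(func):
        @functools.wraps(func)
        def wrapper(inputs, output_name):
            result = func(inputs)
            TRANSFORMATION_LOG.append({
                "step": func.__name__,
                "kind": kind,
                "inputs": sorted(inputs),  # names of the input datasets
                "output": output_name,
            })
            return result
        return wrapper
    return decorator


@track_transformation("filter")
def drop_small_orders(inputs):
    return [row for row in inputs["raw.orders"] if row["amount"] >= 10]


@track_transformation("aggregation")
def total_revenue(inputs):
    return sum(row["amount"] for row in inputs["clean.orders"])


orders = [{"id": 1, "amount": 5}, {"id": 2, "amount": 20}, {"id": 3, "amount": 40}]
clean = drop_small_orders({"raw.orders": orders}, "clean.orders")
revenue = total_revenue({"clean.orders": clean}, "analytics.revenue")

for entry in TRANSFORMATION_LOG:
    print(entry)
```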

Metadata

Metadata is the descriptive information about the data itself—its schema, type, format, and context. Capturing metadata is essential for maintaining accurate lineage, as it helps describe what data exists and how it relates to other data.

Lineage granularity

Granularity refers to the depth at which lineage is captured: dataset, table, column, or row level. Higher granularity (such as column-level lineage) offers more detailed insights but adds computational overhead. For certain compliance use cases in which every data transformation must be recorded, lineage can go as deep as the function or row level.
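To illustrate what column-level granularity adds, the sketch below assumes a hypothetical mapping from each derived column to the columns it is computed from, and resolves any column back to its root source columns. It assumes the mapping is acyclic.

```python
# Hypothetical column-level lineage: each derived column maps to the columns it is computed from.
COLUMN_LINEAGE = {
    "report.price_category": {"clean.price"},
    "report.customer_name": {"clean.name"},
    "clean.price": {"raw.unit_price", "raw.quantity"},
    "clean.name": {"raw.first_name", "raw.last_name"},
}


def source_columns(column, lineage):
    """Return the set of root source columns that feed `column` (assumes no cycles)."""
    parents = lineage.get(column)
    if not parents:
        # No recorded inputs: treat the column itself as a source column.
        return {column}
    roots = set()
    for parent in parents:
        roots |= source_columns(parent, lineage)
    return roots


print(sorted(source_columns("report.price_category", COLUMN_LINEAGE)))
```

Table-level lineage would only say that `report` depends on `clean`; column-level lineage shows that `price_category` depends solely on the price inputs and not on the name columns.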

Some open-source libraries automatically capture different levels of lineage granularity from user code. An example of column-level lineage being captured by Spline on Spark code is shown below.


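```python
from pyspark.sql import SparkSession
from pyspark.sql.functions import when

# Initialize a Spark session with the Spline agent enabled.
# The Spline agent bundle JAR matching your Spark/Scala version must be on the
# classpath (e.g., via --packages or spark.jars.packages); Spline runs on the JVM.
spark = (
    SparkSession.builder
    .appName("Spline Example")
    # Register Spline's listener so it captures lineage for each write action
    .config(
        "spark.sql.queryExecutionListeners",
        "za.co.absa.spline.harvester.listener.SplineQueryExecutionListener",
    )
    # Endpoint of the Spline producer API; the exact property name depends on the agent version
    .config("spark.spline.producer.url", "http://localhost:8080/lineage")
    .getOrCreate()
)

# Sample DataFrame
data = [(1, "Alice", 500), (2, "Bob", 1500), (3, "Charlie", 200)]
columns = ["id", "name", "price"]
df = spark.createDataFrame(data, columns)

# Transformation: add a price category derived from the price column
df = df.withColumn(
    "price_category",
    when(df.price > 1000, "high")
    .when(df.price > 100, "medium")
    .otherwise("low"),
)

# Write the DataFrame to a sink (e.g., a Parquet file); Spline records lineage on write
df.write.mode("overwrite").parquet("/path/to/output")

# Stop the Spark session
spark.stop()
```

Column-level lineage capture with Spline (Source)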

Dependencies and relationships

Data lineage maps how datasets, applications, and systems rely on one another, helping organizations understand the downstream effects of changes in the data pipeline. For instance, updating a data source can have cascading effects on the reports or dashboards that depend on it. Capturing these dependencies requires integrating the lineage system into the data pipeline so it knows which pipeline updates which datasets.
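As a sketch, dependencies can be stored as a map from each dataset or pipeline to its direct consumers; a breadth-first traversal then lists everything a change could affect. The node names below are hypothetical.

```python
from collections import deque

# Hypothetical dependency map: dataset or pipeline -> assets that consume it directly.
DOWNSTREAM = {
    "crm.customers": ["etl.load_customers"],
    "etl.load_customers": ["warehouse.dim_customer"],
    "warehouse.dim_customer": ["dashboard.churn", "ml.churn_features"],
    "ml.churn_features": ["ml.churn_model"],
}


def downstream_impact(node, graph):
    """Breadth-first traversal returning every asset affected by a change to `node`."""
    affected = []
    seen = {node}
    queue = deque([node])
    while queue:
        current = queue.popleft()
        for child in graph.get(current, []):
            if child not in seen:
                seen.add(child)
                affected.append(child)
                queue.append(child)
    return affected


print(downstream_impact("crm.customers", DOWNSTREAM))
```

Because pipelines (`etl.load_customers`) sit in the same graph as datasets, the traversal answers both "which jobs rerun" and "which reports change" in one pass.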

Versioning and history

Keeping track of historical changes to both data and metadata is crucial for compliance and auditing. Versioning lets organizations trace back to earlier data states and reconstruct data flows when an issue arises. It is also valuable for tracing schema drift and model drift, changes that are typically hard to track and pinpoint.
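For example, if schema snapshots are versioned, schema drift can be detected by diffing two versions. The sketch below assumes each snapshot is a simple column-to-type mapping; the schemas themselves are hypothetical.

```python
# Hypothetical schema snapshots captured at two points in time: column name -> type.
SCHEMA_V1 = {"id": "bigint", "name": "string", "price": "int"}
SCHEMA_V2 = {"id": "bigint", "full_name": "string", "price": "decimal(10,2)"}


def schema_diff(old, new):
    """Compare two schema versions and report added, removed, and retyped columns."""
    added = sorted(set(new) - set(old))
    removed = sorted(set(old) - set(new))
    retyped = sorted(
        (col, old[col], new[col])
        for col in set(old) & set(new)
        if old[col] != new[col]
    )
    return {"added": added, "removed": removed, "retyped": retyped}


drift = schema_diff(SCHEMA_V1, SCHEMA_V2)
print(drift)
```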

Data flow latency

Tracking not just the path but also the timing of data flows is essential for performance monitoring. Latency insights can reveal bottlenecks in real-time systems and help optimize processing pipelines for faster delivery. This requires the data lineage solution to integrate with the data pipeline orchestration solution.
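A minimal sketch of latency tracking, assuming the orchestrator reports start and end timestamps per stage (the stage names and times below are hypothetical): compute each stage's duration and flag the slowest one as the bottleneck.

```python
from datetime import datetime

# Hypothetical stage timings for one pipeline run: (stage, start, end).
RUN = [
    ("extract", "2024-01-01T02:00:00", "2024-01-01T02:05:00"),
    ("transform", "2024-01-01T02:05:00", "2024-01-01T02:47:00"),
    ("load", "2024-01-01T02:47:00", "2024-01-01T02:52:00"),
]


def stage_latencies(run):
    """Return each stage's duration in seconds, in pipeline order."""
    return {
        stage: (datetime.fromisoformat(end) - datetime.fromisoformat(start)).total_seconds()
        for stage, start, end in run
    }


latencies = stage_latencies(RUN)
bottleneck = max(latencies, key=latencies.get)
print(latencies)
print("Bottleneck:", bottleneck)
```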

Data sensitivity and privacy

Automated lineage solutions must incorporate privacy concerns by classifying sensitive data such as Personally Identifiable Information (PII). Understanding how sensitive data moves through the system helps ensure compliance with privacy regulations.
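Classification often starts with pattern matching on sampled values. The sketch below is deliberately simple: the regular expressions, the 80% match threshold, and the column names are assumptions, and production classifiers use far more robust detection. The resulting tags would then be attached to lineage nodes so downstream datasets can inherit them.

```python
import re

# Simple, illustrative detectors; not exhaustive or production-grade.
PII_PATTERNS = {
    "email": re.compile(r"^[\w.+-]+@[\w-]+(\.[\w-]+)+$"),
    "us_phone": re.compile(r"^\(?\d{3}\)?[-. ]?\d{3}[-. ]?\d{4}$"),
}


def classify_columns(samples, threshold=0.8):
    """Tag a column with a PII type if at least `threshold` of its non-empty samples match."""
    tags = {}
    for column, values in samples.items():
        non_empty = [v for v in values if v]
        if not non_empty:
            continue
        for pii_type, pattern in PII_PATTERNS.items():
            matches = sum(1 for v in non_empty if pattern.match(v))
            if matches / len(non_empty) >= threshold:
                tags[column] = pii_type
                break
    return tags


samples = {
    "contact": ["alice@example.com", "bob@example.org", ""],
    "phone": ["555-123-4567", "(555) 987-6543"],
    "country": ["US", "DE", "FR"],
}
print(classify_columns(samples))
```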

Security and access control

Data lineage should also capture security information, such as access controls and policies. Organizations can better safeguard sensitive information by understanding who can access or modify data at different stages.

Data vs. pipeline lineage

Data lineage tracks the data flow along its journey through the data pipeline. It provides data provenance information to understand data sources and whether they have particular security, privacy, or handling requirements. It shows where the data came from and how it changed; however, it doesn’t include the operational aspects of the data pipeline, such as tracking delayed or failed jobs.

To identify the root cause of data pipeline problems, data lineage must be complemented with pipeline observability. Pipeline lineage, also called operational lineage, fills this gap by tracking data as it moves through live processes, such as ETL jobs, real-time streaming pipelines, or data transformations in cloud systems. Pipeline lineage lets you monitor the entire data journey across pipeline stages and correlate monitoring data with operational information to pinpoint where issues affecting data quality and reliability arise.

Classification of data lineage types

Data lineage types can be classified by:

- Documentation method (manual vs. automated)

- Technique used (metadata extraction, real-time tracking)

- Stakeholder needs (business vs. technical users)

Each type serves different requirements, from high-level insights for business teams to detailed traces for technical troubleshooting. This alignment ensures lineage supports both strategic and operational goals.

Descriptive data lineage

Historically, data lineage was documented manually, an approach often referred to as descriptive lineage. This static, human-generated documentation was error-prone and required continuous updating as processes evolved. It was mostly kept in entity relationship diagrams and mapping documents.

Design lineage

Design lineage focuses on the intended data flow outlined during the pipeline design phase. It provides a blueprint of how data is supposed to move through systems for validation and governance purposes.

Business lineage

Business lineage connects data elements to their corresponding business terms and definitions. For instance, a "customer ID" used in a technical system can be traced back to the business definition of "customer" in a CRM. This is critical for aligning business rules with technical implementation.

Automated data lineage

Automating data lineage involves specialized processes that trace data changes and transformations throughout the data lifecycle and capture the entire lineage in real time. It requires:

- Extracting and integrating lineage details from sources like SQL queries, metadata repositories, and log files.

- Providing data change specifics and visualizations that help stakeholders understand how data has evolved.

Automated lineage ensures that every data journey stage is transparent, accessible, and consistently up-to-date.
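To make the extraction step concrete, here is a deliberately naive sketch that pulls source and target tables out of a SQL statement with regular expressions. It is an illustration only: the patterns are assumptions, and real extractors use a full SQL parser to handle CTEs, subqueries, quoting, and dialect differences.

```python
import re

# Naive patterns for illustration only.
TARGET_RE = re.compile(r"\b(?:INSERT\s+INTO|CREATE\s+TABLE)\s+([\w.]+)", re.IGNORECASE)
SOURCE_RE = re.compile(r"\b(?:FROM|JOIN)\s+([\w.]+)", re.IGNORECASE)


def extract_table_lineage(sql):
    """Return (sources, targets) table names referenced by a SQL statement."""
    targets = set(TARGET_RE.findall(sql))
    sources = set(SOURCE_RE.findall(sql)) - targets
    return sorted(sources), sorted(targets)


query = """
INSERT INTO analytics.daily_sales
SELECT o.order_date, c.region, SUM(o.amount)
FROM raw.orders o
JOIN raw.customers c ON o.customer_id = c.id
GROUP BY o.order_date, c.region
"""
print(extract_table_lineage(query))
```

Each (source, target) pair becomes a lineage edge; running this over every query in a warehouse's query history yields table-level lineage without manual documentation.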

Platforms like Pantomath take this further by streamlining data and pipeline lineage extraction, effectively removing the "human in the loop." This approach minimizes errors, enhances accuracy, and frees data engineering resources to focus on business logic and workflow optimization rather than manual lineage tracking. As a result, organizations gain a reliable, real-time view of data flows and transformations. They can ensure data lineage remains current and aligned with evolving business needs.


Use cases and benefits of automated data lineage

Data visibility and provenance

Automated data lineage provides complete transparency into data's origin, transformation, and destination. This visibility supports data trust and enhances users’ understanding of how data evolves through various systems.

Enhanced regulatory compliance

Maintaining compliance with data protection and privacy regulations is imperative for organizations in regulated industries. Automated data lineage provides an audit trail detailing the handling and movement of sensitive data throughout its lifecycle. It helps organizations adhere to GDPR, HIPAA, and other regulatory requirements.

Efficient change management

Automated data lineage supports structured change management in data environments. By visualizing the data flow and dependencies, lineage enables teams to assess the downstream effects of schema updates or new transformations before applying them. It reduces the error risk and ensures smoother transitions.

Automated data governance implementation

Automated lineage tools enforce governance policies by tracking how data is accessed and transformed. These tools can identify instances when data is accessed or processed in ways that deviate from organizational policies so data governance teams take corrective actions as needed.
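A minimal sketch of policy checking, assuming datasets carry sensitivity tags and access events are available from query logs. The policy, tags, roles, and events below are hypothetical, and untagged datasets are denied by default.

```python
# Hypothetical governance policy: which roles may read datasets carrying each tag.
POLICY = {
    "pii": {"privacy_officer", "support_lead"},
    "public": {"analyst", "privacy_officer", "support_lead", "marketing"},
}
DATASET_TAGS = {"warehouse.customers": "pii", "warehouse.products": "public"}

# Hypothetical access events, e.g., parsed from warehouse query logs.
ACCESS_EVENTS = [
    {"user": "ana", "role": "analyst", "dataset": "warehouse.products"},
    {"user": "mo", "role": "marketing", "dataset": "warehouse.customers"},
    {"user": "pat", "role": "privacy_officer", "dataset": "warehouse.customers"},
]


def policy_violations(events, policy, tags):
    """Return events where the user's role is not allowed for the dataset's tag."""
    violations = []
    for event in events:
        tag = tags.get(event["dataset"])
        allowed = policy.get(tag, set())  # unknown or untagged datasets: deny by default
        if event["role"] not in allowed:
            violations.append(event)
    return violations


for violation in policy_violations(ACCESS_EVENTS, POLICY, DATASET_TAGS):
    print("Violation:", violation)
```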

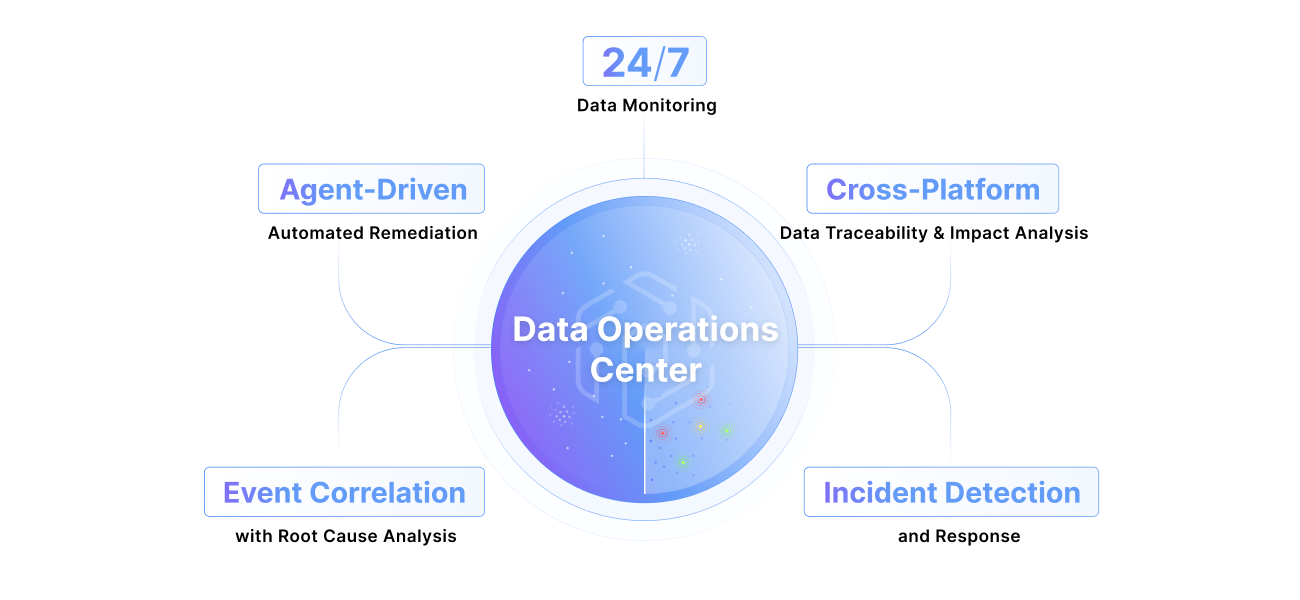

Enhanced use cases with pipeline lineage and traceability

Enhanced functionality emerges when automated data lineage is enriched with pipeline lineage and operational insights. Integration enables data teams to go beyond simple data flow visualization, supporting automated impact and root cause analysis, autonomous data quality management, and incident management. By combining lineage with pipeline traceability and observability, tools like Pantomath reduce the manual intervention typically required to identify and address bottlenecks in complex, interconnected data environments.

Key use cases include:

Clear impact analysis

Automated data lineage helps assess the impact of changes in data sources or transformations on downstream applications and processes. In complex data ecosystems, even minor adjustments can have cascading effects.

Pantomath provides a pipeline impact view that correlates data and pipeline lineage dependencies for intuitive downstream impact analysis. It allows users to see exactly which processes, reports, or datasets may be affected by a particular change.

Automated root-cause analysis (RCA)

When automated data lineage integrates with pipeline observability, it enables automated root-cause analysis (RCA) to pinpoint the exact origin of data issues. For instance, Pantomath combines operational insights with data lineage to provide automated RCA so data engineers can trace anomalies back to failed jobs or delayed processes in real time. Deeper insights minimize downtime by quickly identifying and addressing the source of issues.
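The core idea can be sketched as an upstream walk over pipeline lineage that checks each job's latest run status. This is a generic illustration, not Pantomath's implementation; the graph, job names, and statuses are hypothetical.

```python
# Hypothetical pipeline lineage: each asset maps to the jobs or datasets that produce it.
UPSTREAM = {
    "dashboard.revenue": ["job.build_revenue"],
    "job.build_revenue": ["warehouse.orders", "warehouse.fx_rates"],
    "warehouse.orders": ["job.load_orders"],
    "warehouse.fx_rates": ["job.load_fx_rates"],
}
# Latest run status per job, e.g., collected from the orchestrator.
JOB_STATUS = {
    "job.build_revenue": "success",
    "job.load_orders": "delayed",
    "job.load_fx_rates": "failed",
}


def candidate_root_causes(asset, upstream, status):
    """Walk upstream from an anomalous asset and collect jobs whose last run did not succeed."""
    causes = []
    seen = set()
    stack = [asset]
    while stack:
        node = stack.pop()
        for parent in upstream.get(node, []):
            if parent in seen:
                continue
            seen.add(parent)
            # Datasets have no run status, so they default to "success" and are not flagged.
            if status.get(parent, "success") != "success":
                causes.append((parent, status[parent]))
            stack.append(parent)
    return causes


print(candidate_root_causes("dashboard.revenue", UPSTREAM, JOB_STATUS))
```

Note that `job.build_revenue` succeeded yet the dashboard is still wrong; the walk surfaces the failed and delayed loads further upstream as the likely causes.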

Autonomous data quality management

While data lineage itself provides a visual map of data flow, combining it with operational data enables proactive data quality management. Pantomath’s pipeline lineage, for instance, tracks operational anomalies and autonomously flags data quality issues, such as frequent data discrepancies in a transformation step. This autonomy reduces manual monitoring and allows teams to proactively address data quality challenges.
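As a generic sketch of how a transformation step might be flagged automatically, the example below assumes the lineage system records each run's output-to-input row-count ratio for a step and applies a simple z-score check to the latest run. The history values and the threshold of 3 standard deviations are assumptions.

```python
from statistics import mean, stdev

# Hypothetical history of output/input row-count ratios for one transformation step.
RATIO_HISTORY = [0.97, 0.98, 0.96, 0.97, 0.98, 0.97]


def is_anomalous(history, latest, z_threshold=3.0):
    """Flag `latest` if it lies more than `z_threshold` standard deviations from the history mean."""
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return latest != mu
    return abs(latest - mu) / sigma > z_threshold


print(is_anomalous(RATIO_HISTORY, 0.97))  # a typical run
print(is_anomalous(RATIO_HISTORY, 0.55))  # far fewer rows survived the step
```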

Optimized resource utilization

Through pipeline lineage, organizations gain insights into the efficiency of their data processing pipelines. By identifying bottlenecks and understanding resource usage across transformations and workflows, teams can make informed decisions to optimize infrastructure and resource allocation.

Incident management

Pipeline lineage extends data lineage by tracking job statuses, errors, and process dependencies for incident management workflows. Advanced platforms support incident management through real-time alerts. Engineers can accordingly take corrective measures based on pipeline status, operational logs, and lineage data with the aim of reducing system downtime and improving overall reliability.

By integrating pipeline lineage and operational insights, data lineage can transition from mapping data flows to proactively managing and optimizing data operations. These capabilities make data platforms more resilient, allowing data teams to focus on strategic tasks rather than managing routine incidents and issues.

Key considerations for automated data and pipeline lineage

When designing and implementing automated data and pipeline lineage systems, it’s crucial to account for the differences between static metadata and the evolving nature of systems across a data ecosystem. Building comprehensive lineage solutions requires understanding how to:

- Capture real-time changes

- Manage granular lineage levels

- Integrate with existing governance frameworks

Below, we discuss critical considerations and gotchas that data engineers and architects should bear in mind.

Automated metadata capture and synchronization

A reliable lineage system requires continuous and automated metadata capture across all databases, ETL pipelines, streaming platforms, and APIs. Synchronization with real-time updates is crucial to ensure lineage accuracy, particularly when sources, destinations, and transformation jobs are constantly changing.

Pantomath provides users with native connectors, which promote seamless integration with pre-existing data stacks. Customers simply have to configure their platform and then connect to Pantomath’s framework.

Also, using the Pantomath SDK, data engineers can efficiently parse logs from orchestration platforms like Astronomer to keep lineage synchronized with real-time job states and orchestrator logs.

```python
from pantomath_sdk import PantomathSDK, AstroTask, S3Bucket, SnowflakePipe
from time import sleep


def main():
    # Construct your job
    astro_task = AstroTask(
        name="astro_task_1",
        dag_name="astro_dag_1",
        host_name="astro_host_1"
    )

    # Construct your source and target data sets
    source_data_sets = [
        S3Bucket(s3_bucket="s3://some-bucket-1/file.csv")
    ]
    target_data_sets = [
        SnowflakePipe(
            name="snowpipe_1",
            schema="snowflake_schema_1",
            database="snowflake_database_1",
            port=443,
            host="snowflake_host_1.example.com",
        )
    ]

    # Create an instance of the PantomathSDK
    pantomath_sdk = PantomathSDK(api_key="****")

    # Capture your job run
    job_run = pantomath_sdk.new_job_run(
        job=astro_task,
        source_data_sets=source_data_sets,
        target_data_sets=target_data_sets,
    )
    job_run.log_start(message="Starting Astro task")
    for i in range(5):
        job_run.log_progress(
            message=f"Completed step {i + 1}",
            records_effected=i * 100
        )
        sleep(2)
    job_run.log_success(message="Succeeded!")


if __name__ == '__main__':
    main()
```

Automated pipeline run metadata logging via the Pantomath SDK (source)

Lineage granularity tradeoff

Selecting the appropriate level of lineage granularity—whether table, column, row, or function-level—is essential, as finer-grained lineage significantly impacts performance. In regulated industries like banking, capturing row- or function-level lineage becomes necessary to ensure full traceability of data transformations. Data engineers should weigh the performance tradeoffs and consider decoupling lineage extraction and visualization from core data processing to mitigate latency. Specialized lineage calculators or transformation logs track data transformations efficiently, minimizing performance overhead.
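One common way to decouple lineage capture from core processing is a producer/consumer pattern: the processing path only enqueues a lineage event, and a background worker ships events to the lineage store. The sketch below assumes an in-memory list (`emitted`) as a stand-in for a real lineage backend; the event fields are hypothetical.

```python
import queue
import threading

# Lineage events are buffered in memory and shipped by a background worker,
# so the data-processing path only pays the cost of a queue put.
lineage_queue = queue.Queue()
emitted = []  # stand-in for a lineage backend (hypothetical)


def lineage_worker():
    """Consume lineage events until a None sentinel arrives."""
    while True:
        event = lineage_queue.get()
        if event is None:
            lineage_queue.task_done()
            break
        emitted.append(event)  # a real worker might batch these and send them over the network
        lineage_queue.task_done()


def process_batch(batch_id, rows):
    """Core processing step; lineage is recorded without blocking on I/O."""
    result = [r * 2 for r in rows]
    lineage_queue.put({"batch": batch_id, "input_rows": len(rows), "output_rows": len(result)})
    return result


worker = threading.Thread(target=lineage_worker, daemon=True)
worker.start()

for batch_id in range(3):
    process_batch(batch_id, list(range(10)))

lineage_queue.put(None)  # signal shutdown
worker.join()
print(len(emitted), "lineage events emitted")
```

The tradeoff is that lineage becomes eventually consistent: events can lag behind processing, and an unbounded queue should be capped in production to avoid memory growth.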

Visualization

Interactive, intuitive visualizations are essential for exploring complex data flows. Lineage diagrams should support drill-down views at both high and detailed levels, allowing users to analyze data dependencies across entities. This is especially important for non-technical users who rely on lineage visuals to quickly understand data flows.

Graphical notations like directed acyclic graphs (DAGs) visually represent entity relationships and provide insights into dependencies. For example, modeling lineage as a DAG makes dependencies explicit and makes circular dependencies, which should not exist in a well-formed pipeline, easy to spot. This is especially useful when managing entity relationships in a large data system.
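Python's standard library (3.9+) includes `graphlib.TopologicalSorter`, which is enough for a small sketch: it yields a valid processing order for a lineage DAG and raises `CycleError` when a circular dependency sneaks in. The lineage graph below is hypothetical.

```python
from graphlib import CycleError, TopologicalSorter

# Hypothetical lineage: each node maps to the set of nodes it depends on (its predecessors).
LINEAGE = {
    "raw.orders": set(),
    "clean.orders": {"raw.orders"},
    "analytics.sales": {"clean.orders"},
    "dashboard.sales": {"analytics.sales"},
}


def processing_order(graph):
    """Return a valid processing order, or raise CycleError if the lineage contains a cycle."""
    return list(TopologicalSorter(graph).static_order())


print(processing_order(LINEAGE))

# Introduce a circular dependency: raw.orders now (wrongly) depends on dashboard.sales.
broken = {**LINEAGE, "raw.orders": {"dashboard.sales"}}
try:
    processing_order(broken)
except CycleError as err:
    # The second element of err.args holds the nodes that form the cycle.
    print("Cycle detected:", err.args[1])
```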

Cross-system lineage

In distributed environments, automated lineage should trace data across multiple systems on-premises and in the cloud. Cross-system lineage is essential for ensuring a holistic view across heterogeneous architectures. Lineage systems that lack support for specific platforms may require manual data stitching, undermining automation goals.

A robust lineage solution supports all key systems within the data stack to maintain reliability across environments. An example of effective cross-system lineage could involve automated tools that pull metadata from databases, cloud storage, and ETL logs to build a cohesive, multi-platform lineage map.
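Stitching lineage across systems usually hinges on resolving each tool's naming style to one canonical identifier. The sketch below assumes three hypothetical lineage fragments (from an ETL tool, a warehouse, and a BI tool) and illustrative normalization rules; real rules depend on each platform's identifier semantics.

```python
# Lineage fragments reported by different tools, each using its own naming style (hypothetical).
ETL_EDGES = [("s3://landing/orders.csv", "RAW.ORDERS")]
WAREHOUSE_EDGES = [("snowflake://raw.orders", "snowflake://analytics.daily_sales")]
BI_EDGES = [("Analytics.Daily_Sales", "tableau://sales_dashboard")]


def normalize(name):
    """Map system-specific identifiers to one canonical form (illustrative rules only)."""
    name = name.strip()
    prefix = "snowflake://"
    if name.lower().startswith(prefix):
        name = name[len(prefix):]
    if "://" in name:
        # Keep other URIs (e.g., object-store paths) unchanged; they can be case-sensitive.
        return name
    # Treat unquoted warehouse identifiers as case-insensitive.
    return name.lower()


def merge_lineage(*fragments):
    """Union edge lists from several systems after normalizing dataset names."""
    merged = set()
    for edges in fragments:
        for upstream, downstream in edges:
            merged.add((normalize(upstream), normalize(downstream)))
    return sorted(merged)


for edge in merge_lineage(ETL_EDGES, WAREHOUSE_EDGES, BI_EDGES):
    print(edge)
```

After normalization, the three disconnected fragments join into a single path from the S3 landing file to the dashboard.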

Scalability and performance

As data volumes increase, lineage systems must scale efficiently to maintain enterprise-wide visibility without degrading operational performance. Capturing more granular lineage adds processing overhead, so data engineers should balance detail against performance by tuning lineage capture intervals and methods. Techniques like parsing lineage directly from logs or reusing the DAGs already defined in ETL workflows improve scalability by avoiding redundant processing. Incremental updates and change tracking further improve performance by capturing only new or modified lineage information, so the system remains responsive as data volume and complexity grow.
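A minimal sketch of incremental capture, assuming lineage edges are derived from timestamped job-run log entries (the log below is hypothetical): a watermark skips entries already processed, and a set of known edges reports only genuinely new lineage.

```python
# Hypothetical job-run log entries: (run timestamp, upstream, downstream).
LOG = [
    ("2024-05-01T01:00:00", "raw.orders", "clean.orders"),
    ("2024-05-01T02:00:00", "clean.orders", "analytics.sales"),
    ("2024-05-02T01:00:00", "raw.orders", "clean.orders"),
    ("2024-05-02T01:30:00", "raw.refunds", "analytics.sales"),
]


class IncrementalLineage:
    """Accumulates lineage edges, processing only log entries newer than a watermark."""

    def __init__(self):
        self.edges = set()
        # ISO-8601 timestamps in one fixed format compare correctly as strings.
        self.watermark = ""

    def update(self, log):
        """Process entries newer than the current watermark; return edges not seen before."""
        start = self.watermark
        new_edges = []
        for ts, upstream, downstream in log:
            if ts <= start:
                continue  # already processed in an earlier update
            edge = (upstream, downstream)
            if edge not in self.edges:
                self.edges.add(edge)
                new_edges.append(edge)
            self.watermark = max(self.watermark, ts)
        return new_edges


lineage = IncrementalLineage()
first = lineage.update(LOG[:2])
second = lineage.update(LOG)  # replaying the full log only yields genuinely new edges
print("First update:", first)
print("Second update:", second)
```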


Last thoughts

Automated data lineage has become indispensable to today’s data platforms, acting as both a roadmap and a safety net for complex data flows. As data forms the backbone of advanced machine learning and AI systems, automating lineage is now a necessity, so data engineers can focus on high-value tasks rather than manual tracking.

Automated data lineage, enhanced with pipeline lineage and observability, provides a dynamic, accurate map of data’s journey across systems, showing every transformation, integration, and touchpoint in real time. This integration transforms lineage into a proactive tool for issue diagnosis, quality assurance, and operational optimization. Like a GPS for data, it guides teams with precision and empowers organizations to make more informed, reliable data-driven decisions. The result is a more transparent, accountable, and resilient data ecosystem that adapts to change and drives efficiency across the entire data lifecycle.