Data Lineage Diagram Techniques and Best Practices

A data lineage diagram is a visual representation that traces data from its source to its destination through the transformation and analysis stages in between. Before exploring real-world examples, let’s illustrate the concept using the following simplified diagram.

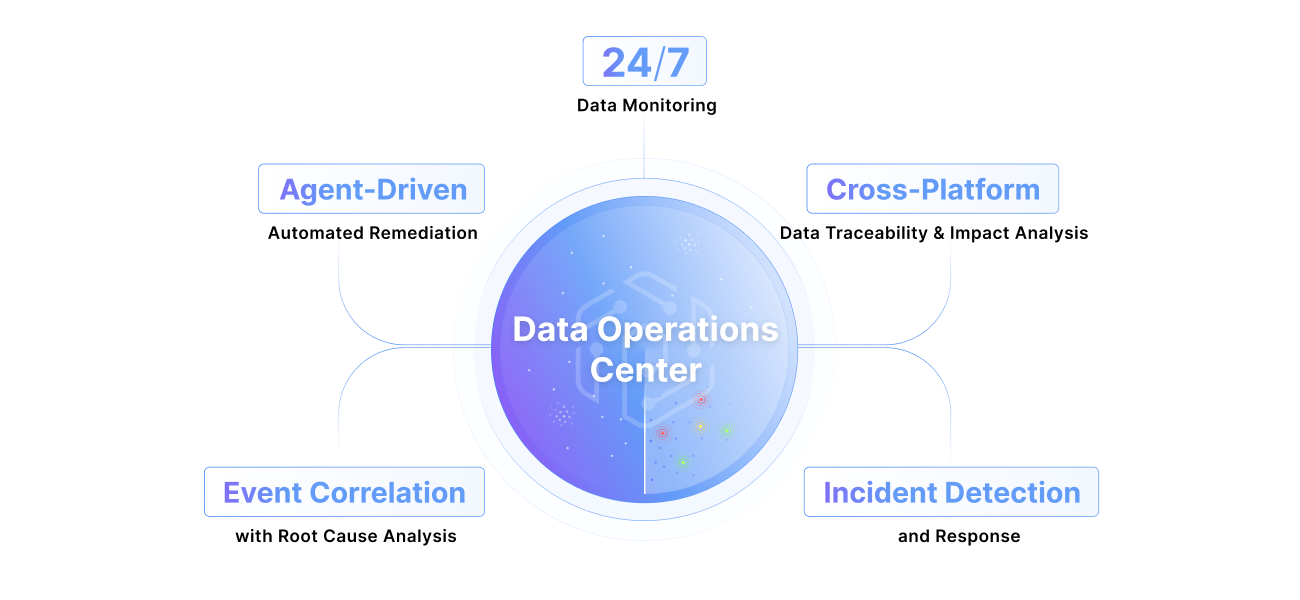

Data lineage is often used to determine data provenance, ensure data privacy and regulatory compliance, or conduct what-if analysis before updating a schema. However, one of the most powerful uses of data lineage is combining it with pipeline traceability (think of monitoring delayed and failed jobs) to help data engineers isolate the reason behind missing, inaccurate, or delayed data by automating the steps involved in the root cause analysis process. This approach is especially valuable in complex environments that rely on multiple commercial and open-source products to transform, analyze, and present data.

Automated data lineage tools use various sources to map out data flows and transformations, including metadata repositories, SQL queries, log files, API calls, data catalogs, source code, database execution plans, schema information, and configuration files.

This article explores the role of data lineage diagrams in modern data management. It introduces the concept of the data journey, highlights common pitfalls, provides sample diagrams, and presents tools designed to automate the process of creating data lineage diagrams in the context of data pipeline operations.

Summary of key data lineage diagram concepts

#1 Data journey

The data journey refers to the comprehensive path data takes through a pipeline, beginning from its initial source and culminating in actionable insights in a report. The diagram below shows one such journey.

This journey involves several stages. First, data generation at the source collects raw data from various inputs such as databases, APIs, sensors, and logs. Next, data ingestion involves importing data into the system using batch processing, real-time streaming, or ETL processes. This stage must manage data latency, completeness, and integration issues.

Following ingestion, intermediate storage temporarily holds data in staging areas or data lakes, where data security and consistency are paramount. Data transformation then cleans, enriches, normalizes, and aggregates the data for analysis. This stage must address quality issues and complex transformation logic.

Data integration combines data from different sources into a unified dataset, ensuring consistency and managing schema changes. In the final storage stage, the transformed data is stored in data warehouses or databases for analysis, balancing storage costs and performance. Data utilization involves analyzing the data with BI tools, generating reports, and creating dashboards.

Finally, archiving and deletion manage long-term storage or compliance-driven data deletion, while data governance and monitoring ensure policies, quality, and regulatory compliance are maintained throughout the journey.

Each stage ensures data is processed, cleansed, and prepared for its ultimate use in decision-making and reporting.

Understanding the data journey is relevant for several reasons, each contributing to effective data management and utilization:

#2 Data context

In data engineering, upstream dependencies refer to data sources and processes that feed data into a particular stage or system. Conversely, downstream dependencies are the systems and processes that rely on the output of that stage or system. Understanding these dependencies is required for effectively managing the data pipeline.

Changes in upstream processes can significantly impact downstream systems, so data engineers must carefully analyze any disruptions or modifications to prevent cascading issues.
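
As a minimal sketch of how upstream and downstream dependencies can be computed, the example below stores lineage as a plain adjacency map and walks it breadth-first. The dataset names and the `DOWNSTREAM` map are hypothetical, chosen only for illustration; a real lineage tool would discover these edges from metadata rather than hard-code them.

```python
from collections import deque

# Hypothetical lineage edges: each key feeds the datasets listed in its value.
DOWNSTREAM = {
    "crm_db.customers": ["staging.customers"],
    "orders_api": ["staging.orders"],
    "staging.customers": ["warehouse.customer_orders"],
    "staging.orders": ["warehouse.customer_orders"],
    "warehouse.customer_orders": ["bi.sales_report"],
    "bi.sales_report": [],
}


def invert(edges):
    """Build the upstream view (node -> direct parents) from the downstream view."""
    upstream = {node: [] for node in edges}
    for parent, children in edges.items():
        for child in children:
            upstream.setdefault(child, []).append(parent)
    return upstream


def reachable(edges, start):
    """Return every node reachable from `start` by following `edges` breadth-first."""
    seen = set()
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for nxt in edges.get(node, []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return seen


UPSTREAM = invert(DOWNSTREAM)

if __name__ == "__main__":
    # Everything affected if staging.orders changes.
    print(sorted(reachable(DOWNSTREAM, "staging.orders")))
    # Everything bi.sales_report depends on.
    print(sorted(reachable(UPSTREAM, "bi.sales_report")))
```

Keeping both views of the same edges makes impact analysis (walk downstream) and dependency analysis (walk upstream) the same traversal over different maps.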

However, extracting more value from data lineage diagrams requires more context about the entities involved, such as the individuals responsible for each data source or segment of the data pipeline. Data ownership refers to the accountability and responsibility assigned to individuals or teams for specific data assets within an organization.

Data owners ensure data quality through regular monitoring and auditing, implementing data quality standards, ensuring data security, and managing access permissions. They use data profiling tools to detect and correct inconsistencies and establish validation rules and cleansing protocols. Despite these efforts, data owners face challenges such as managing increasing data volume and complexity, ensuring consistent data governance across departments, and balancing accessibility with security.

Data lineage diagrams can document data owners and entity dependencies by clearly delineating who is responsible for each part of the data pipeline. They can include annotations or metadata that specify the data owner for each dataset or process, ensuring clarity on who to contact for issues or changes.

For example, this ownership tracking would allow a sales team to identify dependencies on customer data for generating sales reports. If the marketing team fails to maintain high data quality, inaccuracies or issues in the customer data can lead to flawed sales reports, negatively impacting business decisions and strategy.
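
The ownership annotations described above can be sketched as metadata attached to each node of the lineage graph. The `Asset` class, the asset names, and the team names below are all hypothetical and exist only to illustrate the sales-report scenario; they are not taken from any particular tool.

```python
from dataclasses import dataclass, field


@dataclass
class Asset:
    name: str
    owner: str  # team accountable for this asset (hypothetical values below)
    upstream: list = field(default_factory=list)  # names of direct upstream assets


ASSETS = {
    asset.name: asset
    for asset in [
        Asset("crm.customers", owner="marketing"),
        Asset("erp.orders", owner="finance"),
        Asset(
            "warehouse.customer_orders",
            owner="data-engineering",
            upstream=["crm.customers", "erp.orders"],
        ),
        Asset("bi.sales_report", owner="sales", upstream=["warehouse.customer_orders"]),
    ]
}


def upstream_owners(assets, name):
    """Map every direct or indirect upstream asset of `name` to its owner."""
    owners = {}
    stack = list(assets[name].upstream)
    while stack:
        current = stack.pop()
        if current in owners:
            continue  # already visited through another path
        owners[current] = assets[current].owner
        stack.extend(assets[current].upstream)
    return owners


if __name__ == "__main__":
    for asset_name, owner in sorted(upstream_owners(ASSETS, "bi.sales_report").items()):
        print(f"{asset_name}: contact {owner}")
```

With this lookup, the sales team can see that a problem in the customer data traces back to an asset owned by marketing, which tells them who to contact.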

#3 Data scope

Besides ownership, data lineage diagrams can vary in scope. The data journey is composed of multiple components, explained below.

These entities interact in a complex, interconnected manner. Data sources, such as databases and APIs, generate raw data that flows into ingestion jobs. These ingestion jobs, including batch processing and real-time streaming, transfer data to intermediate storage solutions like data lakes and warehouses. From there, data undergoes transformation processes, which include cleaning, aggregation, and enrichment. The transformed data is then integrated and stored in final repositories for analysis. Each step depends on the previous ones, creating a chain of dependencies that ensures data is accurately processed and available for insights.

This complexity makes it difficult to isolate the root cause of a problem when a component malfunctions. For this reason, data lineage diagrams must also account for the operational side of the data pipeline: tracking the jobs and processes that run through the pipeline and correlating their status with the data lineage makes it possible to automate the troubleshooting process.

#4 Data source integrations

Automated integrations leverage various sources of information across many tools to create data lineage maps. The sources include:

- Metadata repositories: Scan and analyze metadata from various systems

- SQL queries and scripts: Parse SQL statements and ETL scripts

- Log files: Index and search application, database, and system logs

- API calls and integrations: Examine API usage at integration points

- Data catalogs: Access enterprise data catalogs

- Code repositories: Analyze application code’s data processing logic

- Database query or execution plans: Interpret SELECT statements and table joins

- Schema information: Determine relationships between tables and fields

- Data profiling results: Reveal patterns and relationships

- Configuration files: Gather information about data sources and transformations
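
To make the "SQL queries and scripts" source concrete, here is a deliberately simplified sketch that derives lineage edges from a single SQL statement. It uses regular expressions rather than a real SQL parser, so it is an assumption-laden illustration: it only handles plain `INSERT INTO ... SELECT` and `CREATE TABLE ... AS SELECT` statements, and it will misread subqueries, CTEs, and expressions such as `EXTRACT(... FROM ...)`. The function name `extract_edges` and the table names are hypothetical.

```python
import re

# Table written by the statement (simplified: INSERT INTO or CREATE [OR REPLACE] TABLE).
TARGET_RE = re.compile(
    r"\b(?:INSERT\s+INTO|CREATE\s+(?:OR\s+REPLACE\s+)?TABLE)\s+([\w.]+)",
    re.IGNORECASE,
)
# Tables read by the statement (simplified: anything directly after FROM or JOIN).
SOURCE_RE = re.compile(r"\b(?:FROM|JOIN)\s+([\w.]+)", re.IGNORECASE)


def extract_edges(sql):
    """Return sorted (source_table, target_table) pairs for one simple statement."""
    target = TARGET_RE.search(sql)
    if target is None:
        return []  # nothing is written, so no lineage edge is recorded
    target_name = target.group(1)
    sources = sorted(set(SOURCE_RE.findall(sql)) - {target_name})
    return [(source, target_name) for source in sources]


if __name__ == "__main__":
    statement = """
        INSERT INTO warehouse.customer_orders
        SELECT c.id, c.name, o.total
        FROM staging.customers AS c
        JOIN staging.orders AS o ON o.customer_id = c.id
    """
    for source, target in extract_edges(statement):
        print(f"{source} -> {target}")
```

Production-grade lineage tools typically rely on full SQL parsers and engine-specific metadata instead of pattern matching, precisely because of the cases this sketch cannot handle.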

The tools that automatically generate a data lineage diagram must be integrated with all the tools used along the data journey (like Fivetran, dbt, Spark, or Tableau) to access the metadata required to establish the dependencies. That’s why it’s important for the tool you choose to have many out-of-the-box integrations.

For example, Pantomath's data lineage diagrams use native integrations with popular tools with significant market share to automatically discover the relationships between data entities and provide additional context, such as the health of data pipelines, data ownership, and relevant metadata.

To derive optimal value, automated data lineage diagrams should be coupled with pipeline traceability to correlate delayed or missing data cases with the status of jobs and processes operating on the data to isolate the root cause.
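
The correlation between lineage and job status can be sketched as an upstream walk that looks for the earliest unhealthy job. Everything in this example is hypothetical: the `PRODUCED_BY` and `JOB_STATUS` maps, the job and dataset names, and the status values are stand-ins for what a pipeline monitor would report, and the recursion assumes the lineage graph has no cycles. It is not a description of how any specific product implements root cause analysis.

```python
# Hypothetical lineage: dataset -> (job that produces it, datasets that job reads).
PRODUCED_BY = {
    "staging.hr": ("ingest_hr", []),
    "staging.payroll": ("ingest_payroll", []),
    "warehouse.headcount": ("transform_headcount", ["staging.hr", "staging.payroll"]),
    "bi.hr_dashboard": ("refresh_dashboard", ["warehouse.headcount"]),
}

# Hypothetical latest status per job, as a pipeline monitor might report it.
JOB_STATUS = {
    "ingest_hr": "delayed",
    "ingest_payroll": "succeeded",
    "transform_headcount": "delayed",
    "refresh_dashboard": "succeeded",
}


def root_causes(dataset, produced_by, job_status):
    """Return unhealthy jobs upstream of `dataset` that have no unhealthy job above them.

    Assumes an acyclic lineage graph. A job whose status is missing is
    treated as unhealthy, since its state cannot be confirmed.
    """
    job, inputs = produced_by[dataset]
    causes = set()
    for parent in inputs:
        causes |= root_causes(parent, produced_by, job_status)
    # Blame this job only when nothing further upstream already explains the problem.
    if not causes and job_status.get(job) != "succeeded":
        causes.add(job)
    return causes


if __name__ == "__main__":
    print(sorted(root_causes("bi.hr_dashboard", PRODUCED_BY, JOB_STATUS)))
```

In this sample data, the delayed transformation job is not reported because its delay is already explained by the delayed ingestion job feeding it, which is the kind of narrowing that saves engineers from chasing symptoms.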

To illustrate this functionality, take the example in the screenshot below from the Pantomath product. The hr_erl Fivetran connector ingestion job has a latency incident due to an extended run time. This latency incident could potentially impact five additional data assets across multiple pipelines.

#5 Data operations and troubleshooting

Traditional data lineage tools often lack collaboration features and are accessible only to the data architecture team. This leads to isolated workflows and miscommunication. Modern data lineage tools address this by offering features for creating, sharing, and editing diagrams across teams.

Collaborative and automated troubleshooting tools enhance the ability of data teams to work together, improving communication, fostering faster decision-making, and ensuring better alignment between technical and business teams.

Pantomath pioneered combining the five pillars of data observability into a single platform, including data lineage and pipeline traceability, root cause analysis, alerting, and ticketing to foster collaboration.

Let’s quickly review the five pillars of data observability.

The screenshot below is an example of how the five pillars correlate the data lineage with the operational steps in the data pipeline to detect a problem, isolate the root cause, alert the operations team, open a ticket, and enable collaboration with message exchanges.

The table below presents example scenarios in which data engineering teams can benefit from the solutions described above.

Last thoughts

Data lineage diagrams combined with pipeline traceability powered by native integrations with data warehouses, data lakes, data transformations, and data visualization tools enable

- visualizing the data journey from source to insights,

- mapping upstream and downstream dependencies and data ownership,

- enhancing data governance and compliance efforts, and

- automating root cause analysis and data operations collaboration.

Contact a data expert to learn how Pantomath can help your data operations.